Why DataFramer

Puneet Anand

Sun Jan 26

How DataFramer stands out

When teams evaluate synthetic data platforms, the first demo often looks similar: upload a file, get “more data.” The differences show up later, when you need synthetic data that is measurable, repeatable, and fit for a specific downstream use like evaluation, benchmarking, red-teaming, edge case simulation, or even model training.

DataFramer is built for that second phase. We focus on three things that tend to decide whether synthetic data actually ships:

- Supporting the real shapes your data takes, including long documents and multi-file records with complex, real-life patterns of structured and unstructured data

- Giving you control that is specific enough to be useful, rather than generic “variation”

- Making quality measurable through built-in evaluations, revisions, model selection, and optional expert review

At a glance

| Differentiator | What you get in practice | Why it matters |
|---|---|---|
| Multi-format, multi-structure generation | Generate tabular, short text, long-form documents, and multi-file or multi-folder samples, not just rows in a single table | Modern AI systems depend on mixed data shapes, and synthetic data needs to match that reality |
| Spec-first workflow | A reusable specification you can edit and version, plus generation that stays aligned with the spec | Teams can reproduce results, hand off specs across roles, and create controlled dataset variants using both UI and API |
| Long-form quality workflow | Long-sample generation designed around outlines, drafting, and revision cycles | Real-world data is not limited to a specific token range, and DataFramer can generate documents longer than 50K tokens |
| Built-in evaluations you can operationalize | Evaluation runs as a first-class artifact with reporting, plus the ability to query results in a chat | Synthetic data is only useful if you can measure fitness for your downstream task |
| Seed-light (few samples) and seedless (no samples) options | Start from a handful of examples, or generate from requirements when samples are not accessible | You can begin earlier, especially in privacy-constrained projects where real data access is limited |
| Optional human expert review | Human labeling and optional expert reviews for quality checks in high-stakes domains | Some use cases need a human guarantee for correctness, safety, or auditability |
| Red teaming as part of the same system | Generate adversarial and edge-case suites for prompt injection, jailbreaks, and other robustness checks | Red-teaming is more effective when it is systematic and repeatable |
| Long-text study results (same LLM, different outcomes) | In our Claude Sonnet 4.5 study, the DataFramer workflow produced stronger long-form outputs than baseline prompting on diversity, style fidelity, length, and overall quality | This shows the practical value of scaffolding, evaluations, and revision loops, even when the underlying model stays the same |

What “good synthetic data” means, and how DataFramer helps

Most synthetic data projects succeed when you can answer three questions clearly:

- What does “correct” look like for this dataset and use case?

- Can we generate data that stays consistent with that definition?

- Can we measure it quickly, and iterate when it misses?

Let’s dive deeper into DataFramer’s differentiators.

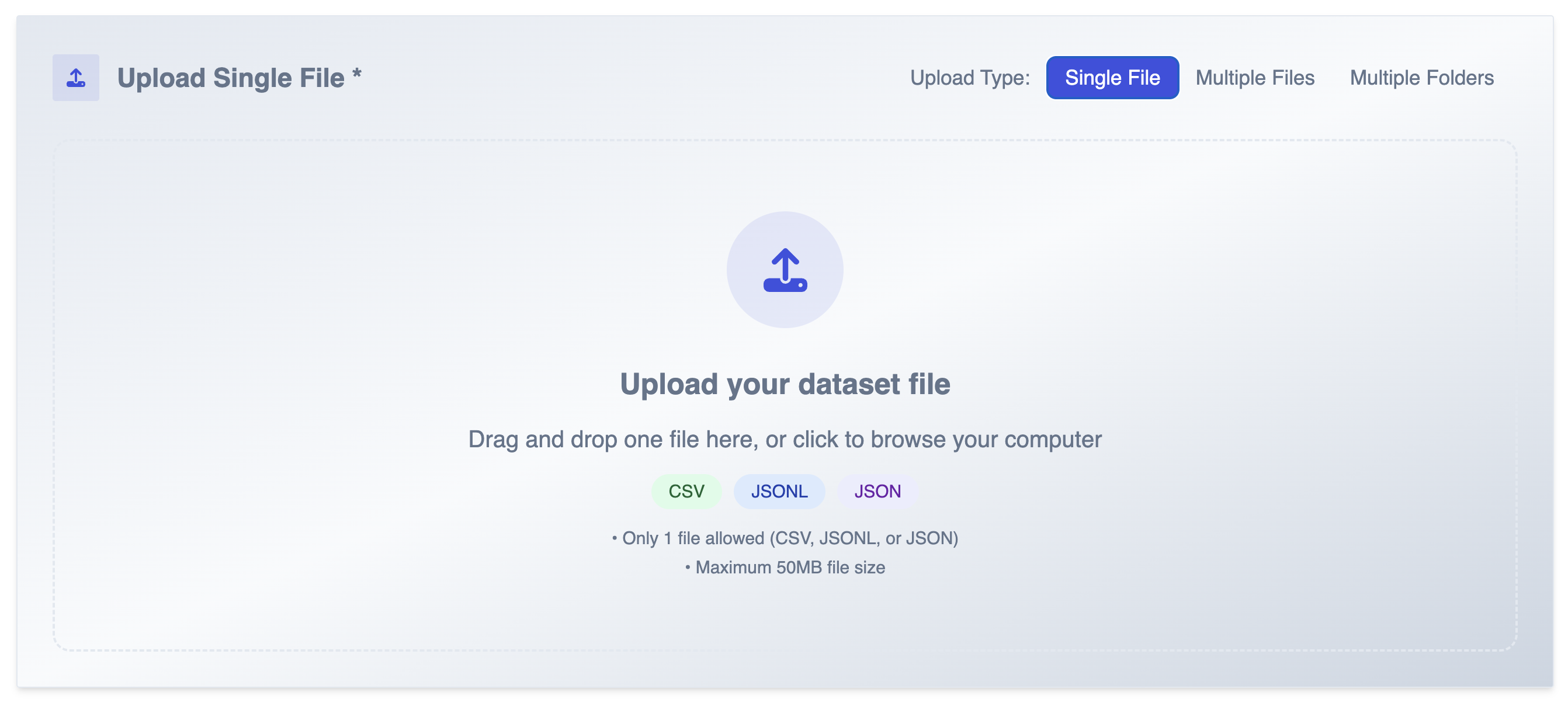

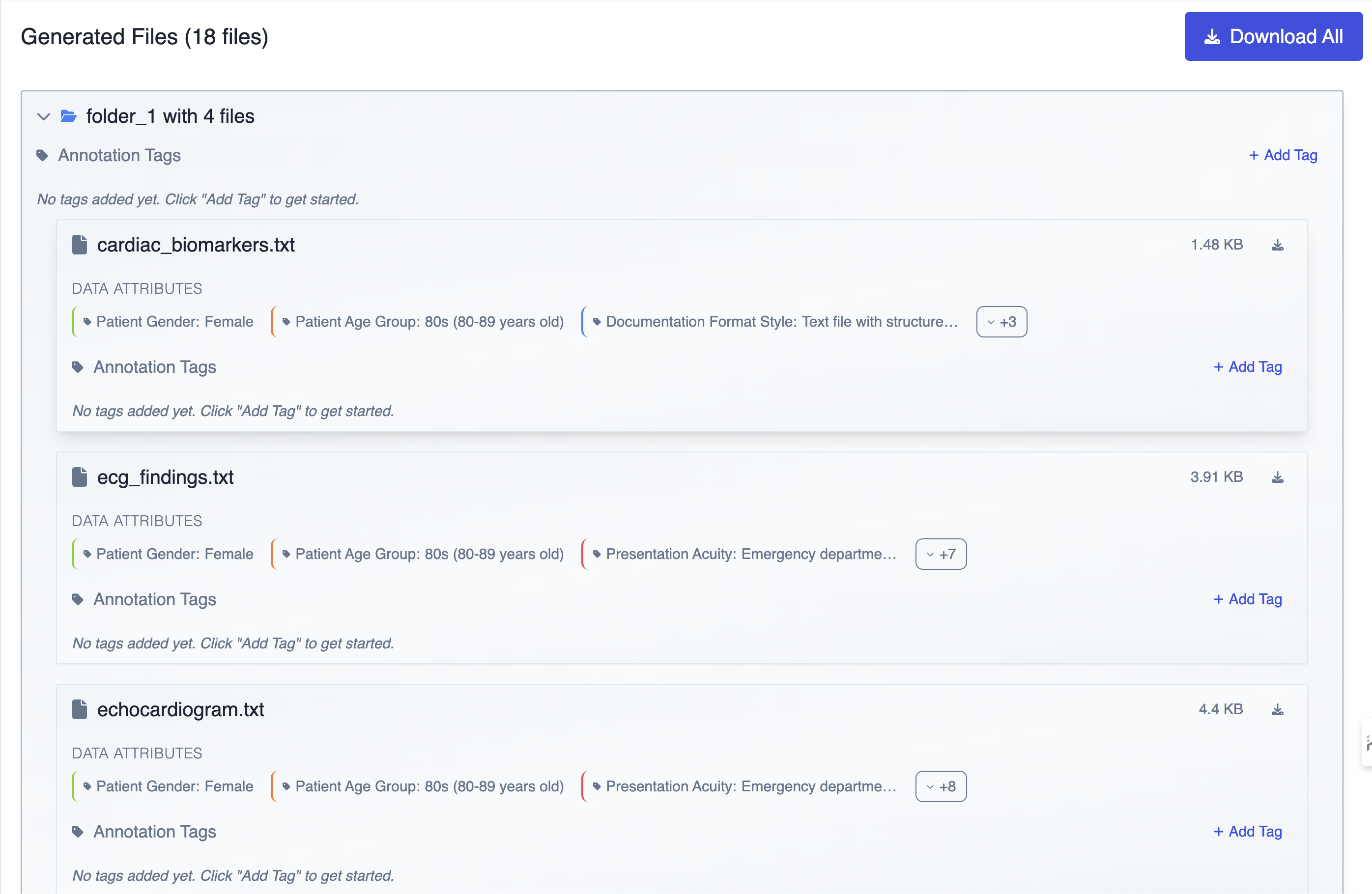

1) Multi-scenario generation across real data formats

Many organizations need synthetic datasets that look like production edge-cases, not simplified examples. That can include long documents, multi-turn conversations, mixed structured and unstructured data, and multi-file records.

DataFramer treats “a sample” as the unit you care about, whether that is a row, a file, or a folder. This helps when your use case involves bundled artifacts rather than single records.

DataFramer uniquely generates multi-file samples and long-form documents

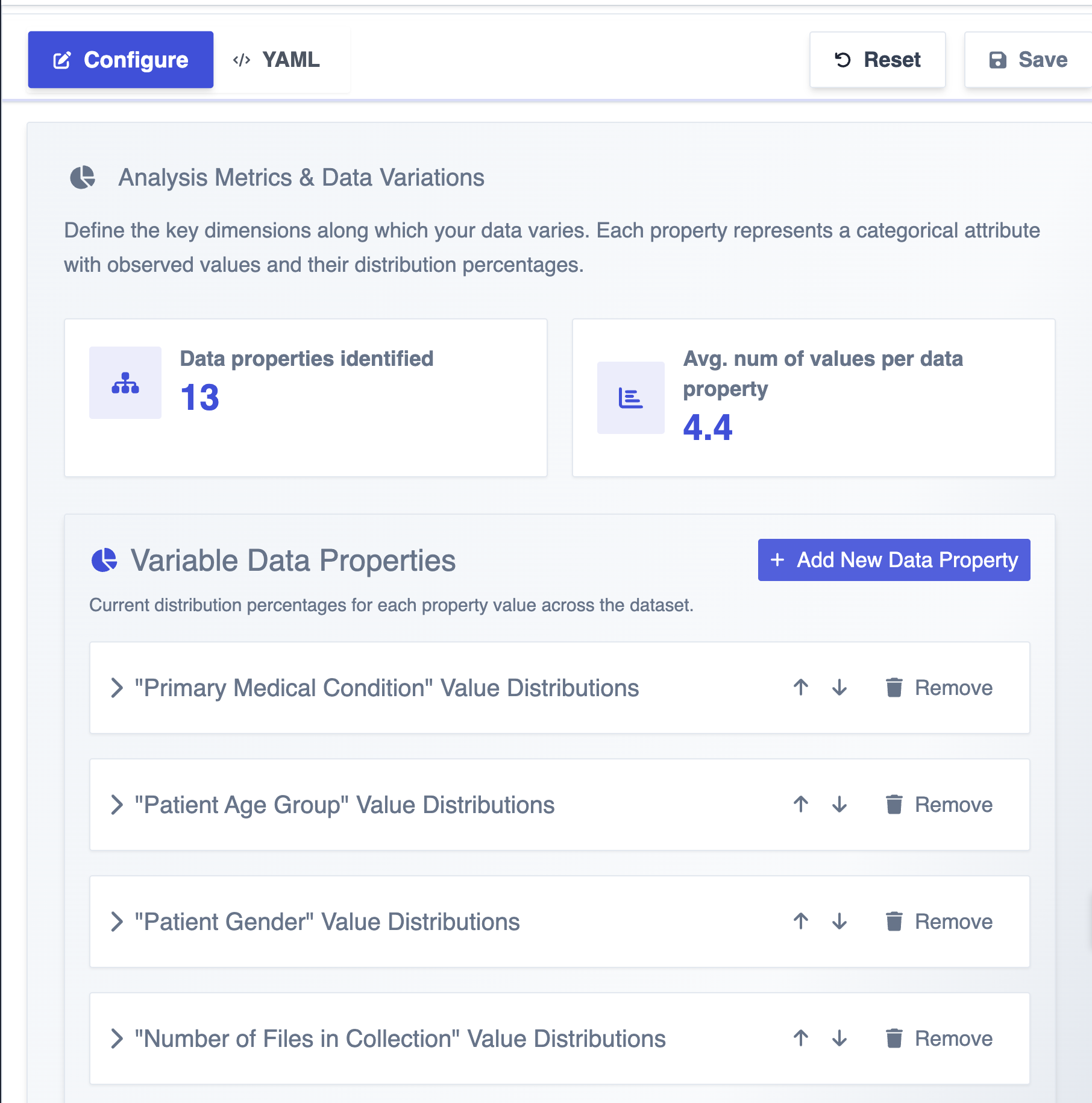

2) Real controllability

“Control” is only valuable if it helps you create the dataset variants you actually need: higher edge-case density, specific distributions, constrained fields, and consistent outputs across versions.

DataFramer emphasizes control across requirements, distributions, and workflow configuration, including choosing different models for different roles in the pipeline.

While most tools give control over schema and relationships, DataFramer extends control to objectives, properties and their probability distributions, conditional distributions, model selection, and algorithm choice.

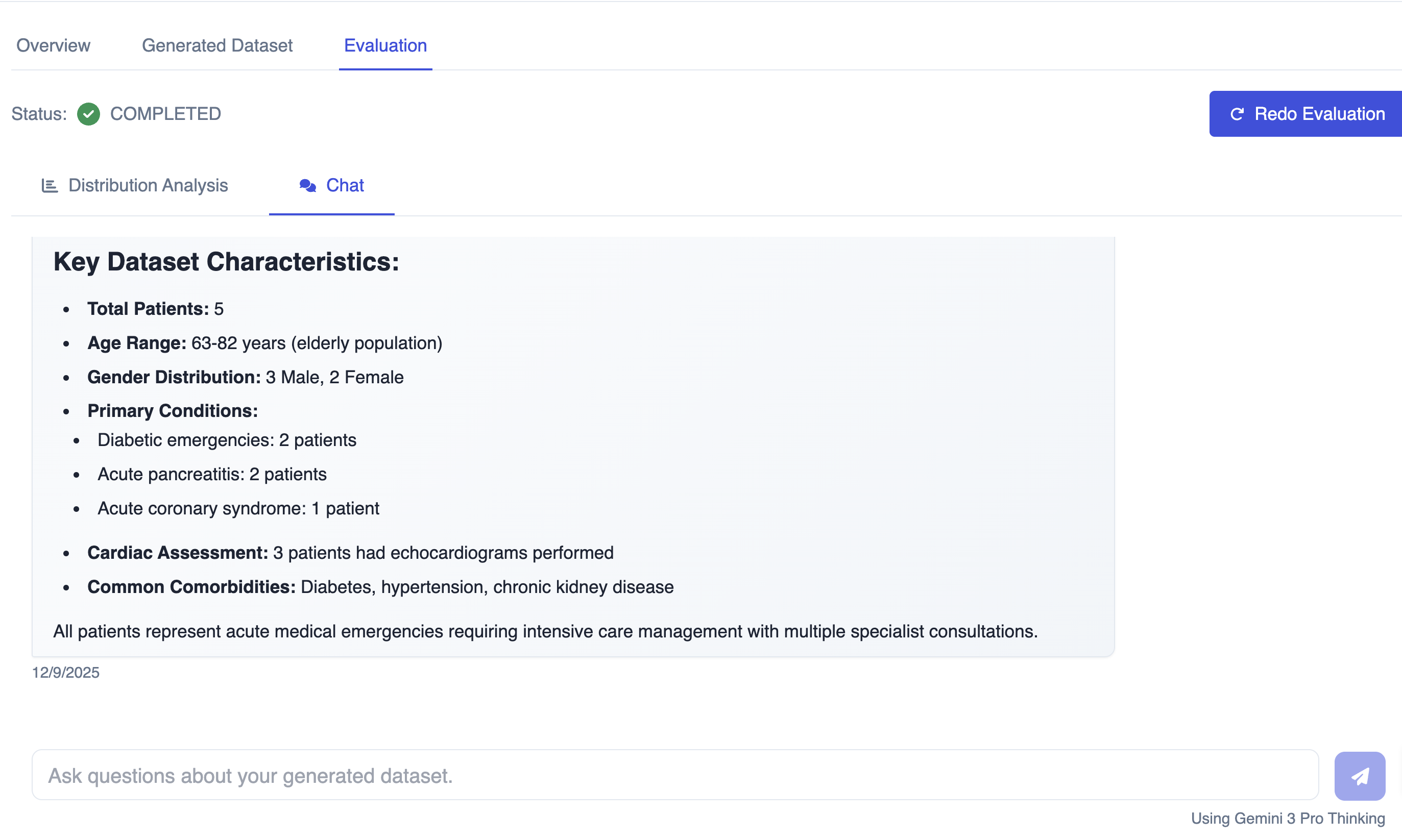
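
To make “properties and probability distributions” and “conditional distributions” concrete, here is a minimal, hypothetical sketch in Python. The property names, values, and probabilities are illustrative assumptions, not DataFramer's specification format or API. The idea is that a spec assigns probabilities to a top-level property, then defines how a second property's distribution changes depending on the first, so you can raise edge-case density in one slice of the data without touching the others.

```python
import random
from collections import Counter

# Hypothetical spec fragment for illustration only; this is NOT DataFramer's
# actual specification format. Each mapping is {value: probability}.
SPEC = {
    # Marginal distribution over a top-level property.
    "line_of_business": {"Life": 0.4, "Health": 0.4, "P&C": 0.2},
    # Conditional distribution: the edge-case rate depends on line_of_business.
    "scenario_given_lob": {
        "Life": {"routine": 0.8, "edge_case": 0.2},
        "Health": {"routine": 0.7, "edge_case": 0.3},
        "P&C": {"routine": 0.5, "edge_case": 0.5},
    },
}


def draw(weights: dict[str, float], rng: random.Random) -> str:
    """Draw one value from a {value: probability} mapping."""
    values = list(weights)
    return rng.choices(values, weights=[weights[v] for v in values], k=1)[0]


def sample_properties(spec: dict, rng: random.Random) -> dict[str, str]:
    """Sample the marginal property first, then the property conditioned on it."""
    lob = draw(spec["line_of_business"], rng)
    scenario = draw(spec["scenario_given_lob"][lob], rng)
    return {"line_of_business": lob, "scenario": scenario}


rng = random.Random(42)  # fixed seed so the sampling plan is reproducible
plan = [sample_properties(SPEC, rng) for _ in range(1000)]
print(Counter((p["line_of_business"], p["scenario"]) for p in plan))
```

Under these assumed numbers, the overall edge-case rate works out to 0.4 × 0.2 + 0.4 × 0.3 + 0.2 × 0.5 = 0.30, while P&C samples are edge cases half the time. Because the generator is seeded, the same spec yields the same sampling plan on every run, which is the kind of repeatability a spec-first workflow aims for.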

3) Built-in evaluations and reporting for fitness-for-use

Synthetic data should not be judged by whether it “looks realistic.” What matters is fitness for a specific downstream use: does it improve evaluations or expose weaknesses during testing? Does it match the expected distributions? Can you query the results conversationally to find out where it falls short?

DataFramer includes built-in evaluation and reporting workflows so teams can measure quality, compare dataset versions, and diagnose failures quickly.

Not all synthetic data generation tools offer built-in evaluations and fitness-for-use reports.
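
One of the fitness questions above, whether the data matches the expected distributions, can be checked with a simple distance between the target distribution in your spec and the labels observed in a generated batch. The sketch below uses total variation distance as one reasonable choice; it is an illustrative assumption, not a description of how DataFramer's built-in evaluations are computed, and the labels are hypothetical.

```python
from collections import Counter


def total_variation(target: dict[str, float], observed: list[str]) -> float:
    """Total variation distance between a target distribution and observed labels.

    Returns 0.0 for a perfect match and 1.0 for completely disjoint distributions.
    """
    if not observed:
        raise ValueError("observed must contain at least one label")
    counts = Counter(observed)
    n = len(observed)
    categories = set(target) | set(counts)
    # Half the L1 distance between target probabilities and observed frequencies.
    return 0.5 * sum(abs(target.get(c, 0.0) - counts[c] / n) for c in categories)


# Hypothetical example: the spec asked for 30% edge cases, the batch has 22%.
target = {"routine": 0.7, "edge_case": 0.3}
observed = ["edge_case"] * 22 + ["routine"] * 78
print(round(total_variation(target, observed), 2))  # 0.08
```

A threshold on this distance (the right value depends on your use case) turns “does it match?” into a pass/fail check you can rerun on every dataset version.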

4) Seed-light (few seed samples) and seedless generation when data access is hard

A common bottleneck is that teams cannot access many real samples due to privacy restrictions, contracts, or internal governance. DataFramer supports seed-light workflows and can also work from requirements when seeds are unavailable.

This is especially useful for early-stage projects where you want to start benchmarking and testing before full data access is granted.

In comparison, most synthetic data generation tools need hundreds of samples.

5) Fairness and rare edge-case simulation

Two problems show up repeatedly in production systems: skewed coverage and missing tails. Teams may need to increase representation, simulate rare events, and stress systems with adversarial conditions.

Most synthetic data generation tools don’t include fairness-aware generation controls, bias testing and red-teaming, or rare edge-case simulation.

6) Scale and integration without complexity

A synthetic data tool has to work in two modes: fast interactive exploration, and reliable scaled generation. DataFramer supports both through a UI and a REST API designed for programmatic workflows.

DataFramer is resilient enough for long-running jobs that take hours or even days.

A non-technical domain expert can author a specification with the desired distributions and requirements, then hand it to a developer lead who runs it repeatably through the API.

A concrete example: DataFramer datasets powering HDM-2 and HDM-Bench

DataFramer is built by the same team behind AIMon Labs' research on hallucination detection. We used DataFramer to create and scale datasets that supported the development of the HDM-2 small language model for hallucination detection and the HDM-Bench benchmark dataset.

In the reported results, the 3B-parameter HDM-2 model outperformed zero-shot prompted GPT-4o and GPT-4o-mini on TruthfulQA and HDM-Bench, and achieved state-of-the-art performance on RAGTruth compared to prompted baselines using gpt-4-turbo. The main point for synthetic data practitioners is that careful dataset design and repeatable evaluation can meaningfully change outcomes, even against large frontier systems.

A customer’s story: An InsurTech AI company scaling Life, Health, and P&C underwriting AI without sharing sensitive customer data

Insurance underwriting data is some of the hardest data to work with. It is sensitive, customer-owned, and often includes PII and PHI. That creates a practical constraint: you cannot easily reuse production data across engineering, product, design, and go-to-market workflows, even when everyone is working on the same AI system.

It also stalls POCs, because enterprise customers often cannot hand their own customer data over to vendors.

One InsurTech AI company we worked with ran into exactly this problem as they expanded across multiple lines of business and enterprise customers.

They needed datasets that were realistic enough to drive rapid feature testing, stable enough for regression testing, and safe enough to use broadly inside the company and with external partners during pilots.

DataFramer generated insurance submissions and electronic health records (EHRs), including patient histories, encounters, labs, and patient journeys, which were reviewed by MDs and EMTs.

Comparing DataFramer and Claude Sonnet 4.5 for long-text generation

Long-form synthetic text is where “just prompt it” breaks down. Even strong models can produce outputs that shrink in length, drift in style, or become repetitive across runs.

In our study comparing baseline prompting with Claude Sonnet 4.5 versus the DataFramer workflow using the same underlying model, we saw a clear pattern: when you add structure, controlled diversity, and revision loops, long-form outcomes improve significantly. That is not a claim about one model being universally better than another. It is an operational lesson about systems: the generation workflow matters as much as the model when you need consistent long-form results.

If long-form data (anything above 10K tokens per sample) is part of your roadmap, here is a useful way to evaluate data generation platforms: ask them to generate long documents from the same seeds, measure diversity and fidelity, and check how well the workflow corrects failures without manual babysitting.
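
Two of the failure modes mentioned above, shrinking length and repetition across runs, are easy to track with simple batch statistics. Here is a minimal sketch, assuming naive whitespace tokenization: distinct-n (the share of unique word n-grams across a batch) is a common lexical-diversity proxy, and mean length catches outputs that shrink. These are illustrative metrics, not necessarily the ones used in our study, and the example batches are toy strings.

```python
def distinct_n(texts: list[str], n: int = 2) -> float:
    """Share of unique word n-grams among all n-grams in a batch (distinct-n).

    Lower values mean more repetition within and across documents.
    """
    total = 0
    unique: set[tuple[str, ...]] = set()
    for text in texts:
        tokens = text.lower().split()  # naive whitespace tokenization (assumption)
        grams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
        total += len(grams)
        unique.update(grams)
    return len(unique) / total if total else 0.0


def mean_length(texts: list[str]) -> float:
    """Average document length in whitespace tokens, to catch outputs that shrink."""
    return sum(len(t.split()) for t in texts) / len(texts) if texts else 0.0


# Toy batches: the first repeats itself, the second does not.
batch_a = ["the claim was approved", "the claim was approved"]
batch_b = ["the claim was approved", "a late filing triggered manual review"]
print(distinct_n(batch_a), distinct_n(batch_b))  # 0.5 1.0
print(mean_length(batch_a), mean_length(batch_b))  # 4.0 5.0
```

Running the same statistics on outputs from each platform, generated from the same seeds, gives a quick first comparison before deeper fidelity checks.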

Link: DataFramer vs. Claude Sonnet 4.5

Resources

- DataFramer Documentation: https://docs.dataframer.ai/

- Complete Workflow Guide: https://docs.dataframer.ai/workflow

- DataFramer vs Claude Sonnet 4.5 Long-text generation study: https://www.dataframer.ai/posts/long-text-generation-dataframer-vs-baseline/

- HDM-2 on Hugging Face: https://huggingface.co/AimonLabs/hallucination-detection-model

- HDM-Bench on Hugging Face: https://huggingface.co/datasets/dataframer/HDM-Bench

"We strive to start each relationship with establishing trust and building a long-term partnership. That is why we offer a complimentary dataset to all our customers to help them get started."

Ready to Get Started?

Contact our team to learn how we can help your organization develop AI systems that meet the highest standards.