How to Generate 50K-Token Documents: Same LLM, Different Results

TL;DR: We compared Dataframer against raw Claude Sonnet 4.5 for long-form text generation; Dataframer won decisively on diversity, style fidelity, length, and quality.

Alex Lyzhov

Mon Jan 12

Summary

We generated 50K-token documents using the same frontier LLM and got dramatically different results. In the baseline, outputs collapsed into short, repetitive “summary essays”. With Dataframer’s scaffold, we consistently got full-length, style-faithful documents across 4 long-text datasets.

What surprised us most (real examples):

- Real Estate: baseline repeated “Zoning” 8 times; Dataframer produced 15 distinct topics from 5 inputs.

- Gutenberg: baseline reused the same plot loop; Dataframer generated genuinely varied stories with strong prose.

- Wiki Medical: baseline got shorter and added unwanted Markdown; Dataframer stayed long and encyclopedic.

Read on for:

- The exact evaluation setup (blind, Gemini 3 Pro)

- The three failure modes (mode collapse, style drift, length shrinkage) and how scaffolding prevents them

- Full outputs + scripts for reproducing our results

Introduction

Long-form synthetic text generation (10K-100K tokens per sample) is critical for LLM evaluation and training, because 2026 LLMs routinely operate with very large prompts and extended chat or agentic traces. It is a challenging domain in which maintaining coherence, diversity, and stylistic fidelity matters enormously.

Raw LLMs struggle here: autoregressive generation commits to tokens without lookahead, can’t revise earlier sections, and tends to drift or repeat as context grows. Long texts require global coherence that single-pass generation can’t guarantee. You need intermediate representations (outlines, state tracking), iterative search and editing, verification loops, and revision procedures. In short, sophisticated scaffolding.
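To make these ingredients concrete, here is a minimal, generic sketch of an outline, generate, verify, and revise loop with a running summary as tracked state. It is an illustration under stated assumptions, not Dataframer's implementation: `llm` is a hypothetical prompt-in, text-out function, `accept` stands in for whatever verification check you use, and the prompt wording is invented.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable

# Hypothetical LLM interface: takes a prompt string, returns generated text.
LLM = Callable[[str], str]


@dataclass
class DocumentState:
    """Running state carried between sections (the 'state tracking' step)."""
    outline: list[str]
    sections: list[str] = field(default_factory=list)
    summary: str = ""  # compressed memory of what has been written so far


def generate_long_document(llm: LLM, brief: str, max_revisions: int = 2,
                           accept: Callable[[str], bool] = lambda s: True) -> str:
    # 1. Intermediate representation: plan the whole document before writing it.
    outline_text = llm(f"Write a numbered outline, one section per line, for: {brief}")
    outline = [line.strip() for line in outline_text.splitlines() if line.strip()]
    state = DocumentState(outline=outline)

    for heading in state.outline:
        # 2. Generate each section conditioned on the full plan and a running
        #    summary, so later sections can stay consistent with earlier ones.
        draft = llm(f"Outline: {state.outline}\nSo far: {state.summary}\nWrite section: {heading}")
        # 3. Verification + revision loop: re-prompt until the check passes
        #    or the revision budget is exhausted.
        for _ in range(max_revisions):
            if accept(draft):
                break
            draft = llm(f"Revise this section to fix its problems:\n{draft}")
        state.sections.append(draft)
        # 4. Update the tracked state instead of re-reading the whole document.
        state.summary = llm(f"Summarize briefly:\n{state.summary}\n{draft}")

    return "\n\n".join(state.sections)
```

Because every section sees the outline, the model gets a form of lookahead it lacks in single-pass generation, and the revision loop gives it a chance to fix a section before moving on.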

What is Dataframer?

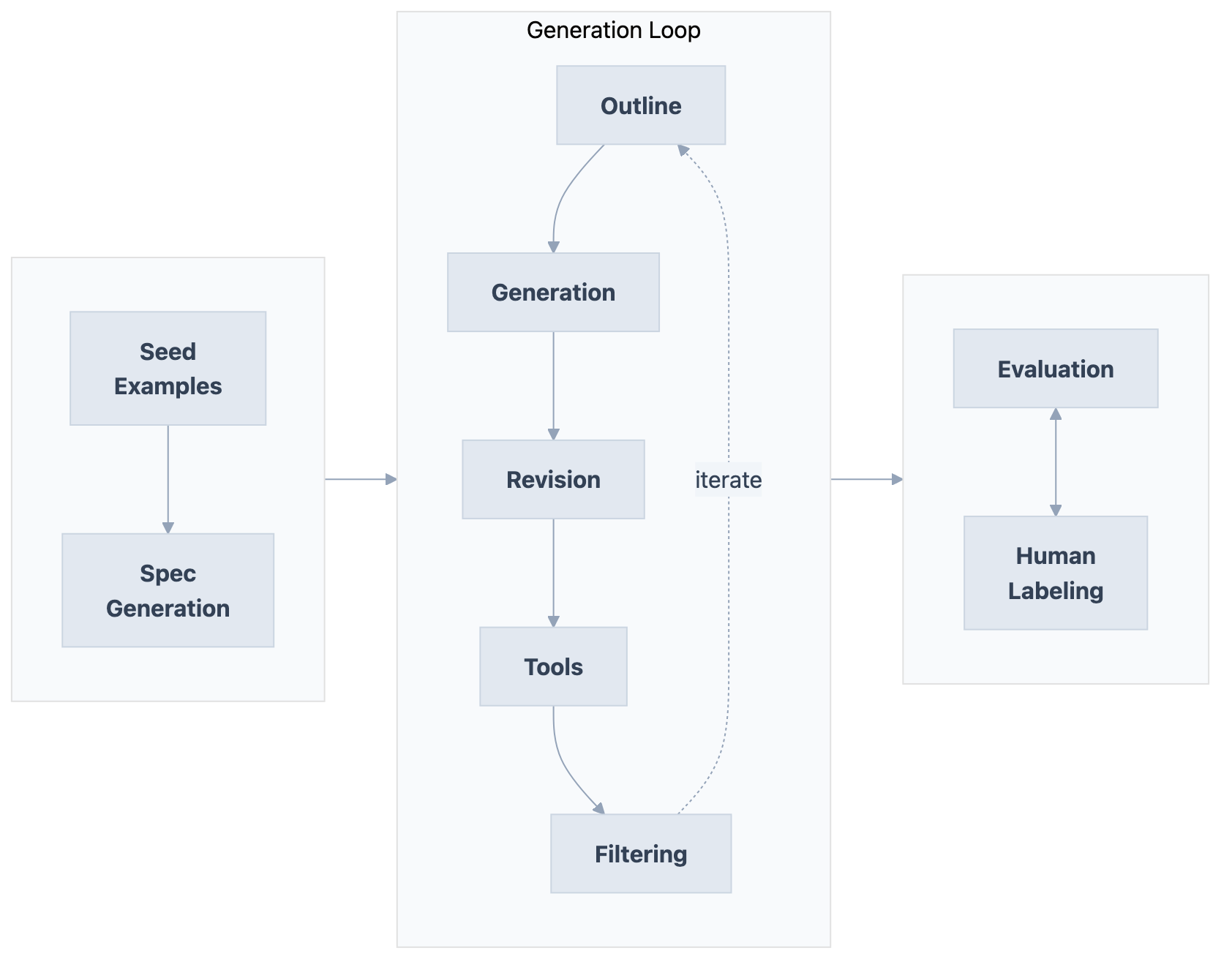

Dataframer is a platform for generating high-quality synthetic datasets at scale. You provide example data, and it generates new samples matching the same patterns, distributions, and structure. Under the hood, an LLM analyzes your seed examples to create a specification capturing their properties and distributions, then runs generation with outlining, iterative evaluation loops, and revision cycles: exactly the intermediate representations and quality controls that raw prompting lacks. For more details, see our documentation.

Experiment Setup

Datasets

We manually collected 4 datasets with long texts as seeds for style and formatting conditioning:

| Dataset | Source | Number of Samples | Sample Length | Description |
|---|---|---|---|---|
| Wikisource | Download | 2 texts | 35k-50k tokens | “Results and Prospects” (Trotsky) + “The Time Machine” (H.G. Wells) |
| Gutenberg | Download | 2 texts | 45k-50k tokens | “The Call of the Wild” (Jack London) + “The Time Machine” (H.G. Wells) |
| Wiki Medical | Download | 2 articles | 25k-30k tokens | “Healthcare in Canada” + “History of Medicine” |
| Wiki Real Estate | Download | 5 articles | 5k-15k tokens | NIMBY, Real Estate Economics, Intellectual Property, Property Management, REITs |

Generation Protocol

We generated up to 15 samples for each dataset. Generation followed the standard flow and was almost completely hands-off:

- Load seed data

- Generate a spec using Claude Sonnet 4.5 - a blueprint capturing the structure, patterns, and requirements of your data

- Make minimal spec edits (see below)

- Generate samples

The spec edits were trivial - we only made changes in 2 places across all 4 datasets. See the before/after specs:

| Dataset | Generated Spec | Edited Spec | Change |
|---|---|---|---|
| Wiki Medical | spec | (same) | No changes |
| Wikisource | before | after | Removed last sentence about length (platform determines length automatically) |
| Wiki Real Estate | spec | (same) | No changes |
| Gutenberg | before | after | Changed “from” to “resembling those from” (we want new fiction, not reproductions) |

There was no cherry-picking: we did not select datasets where Dataframer performs well, nor did we make algorithm changes for these datasets. All seed datasets, generation specs, and scripts are included for reproducibility.

Baseline

As of January 2026, we are not aware of any commercially available system that provides comparable general-purpose generation of diverse long-form texts. We therefore compare against a raw frontier LLM baseline: Claude Sonnet 4.5 in low reasoning mode (a 1,024-token thinking budget). Importantly, Dataframer uses the same model for all of its internal roles (outlining, generation, filtering, revision), so the only difference between the two methods is our agentic scaffold.
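For readers who want to set up a similar raw-prompting baseline, the sketch below assembles a request for the Anthropic Messages API with extended thinking capped at 1,024 tokens. Several details are assumptions rather than the exact baseline configuration: the model alias `claude-sonnet-4-5`, the 64,000-token output cap, and the prompt wording are all illustrative, and `build_baseline_request` / `generate_baseline_sample` are hypothetical helper names.

```python
BASELINE_MODEL = "claude-sonnet-4-5"  # assumption: model alias; pin a dated snapshot for reproducibility
THINKING_BUDGET = 1024  # "low reasoning mode" as described above


def build_baseline_request(seed_texts: list[str], max_tokens: int = 64000) -> dict:
    """Assemble Messages API parameters for one raw-prompting baseline sample."""
    if max_tokens <= THINKING_BUDGET:
        raise ValueError("max_tokens must exceed the thinking budget")
    examples = "\n\n".join(f"<example>\n{t}\n</example>" for t in seed_texts)
    prompt = (  # hypothetical prompt wording, not the exact baseline prompt
        "Here are example documents:\n\n" + examples
        + "\n\nWrite one new document that matches their style, structure, and length."
    )
    return {
        "model": BASELINE_MODEL,
        "max_tokens": max_tokens,
        "thinking": {"type": "enabled", "budget_tokens": THINKING_BUDGET},
        "messages": [{"role": "user", "content": prompt}],
    }


def generate_baseline_sample(seed_texts: list[str]) -> str:
    import anthropic  # imported lazily so the request builder works without the SDK

    client = anthropic.Anthropic()  # reads ANTHROPIC_API_KEY from the environment
    # Streaming avoids client-side timeouts on very long outputs.
    with client.messages.stream(**build_baseline_request(seed_texts)) as stream:
        message = stream.get_final_message()
    # With thinking enabled, the content list holds thinking blocks before text blocks.
    return "".join(block.text for block in message.content if block.type == "text")
```

Keeping the request builder separate from the network call makes it easy to log and diff the exact parameters used for each baseline sample.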

Evaluation Methodology

We designed evaluation to be maximally fair:

- Systems anonymized as “System 1” (Dataframer) and “System 2” (baseline Claude Sonnet 4.5), same number of samples for each

- Independent evaluator from a different model family: Gemini 3 Pro Preview with high reasoning mode

- Evaluator received all samples from both systems in one context window and compared them across 7 dimensions: Diversity, Style Distribution Matching, Length, Quality, Artifacts, Validity, and Overall Assessment
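A single-context blind comparison like this can be assembled along the lines of the sketch below. It is an assumption-laden illustration, not our evaluation script: the prompt wording and the `build_blind_eval_prompt` helper are hypothetical. One deliberate difference from the setup above is that this sketch shuffles which system receives which label, whereas our runs used a fixed mapping (System 1 = Dataframer).

```python
import random

DIMENSIONS = ["Diversity", "Style Distribution Matching", "Length", "Quality",
              "Artifacts", "Validity", "Overall Assessment"]


def build_blind_eval_prompt(seeds, outputs_by_system, seed=0):
    """Put seeds and every system's samples into one prompt under neutral labels.

    Returns (prompt, label_map); label_map records which real system sits
    behind each anonymous label and is never shown to the evaluator.
    """
    names = sorted(outputs_by_system)
    counts = {len(outputs_by_system[n]) for n in names}
    if len(counts) != 1:
        raise ValueError("every system must contribute the same number of samples")
    rng = random.Random(seed)
    rng.shuffle(names)  # label order is independent of system identity
    label_map = {f"System {i + 1}": name for i, name in enumerate(names)}

    parts = ["Seed examples:"] + [f"[Seed {i + 1}]\n{s}" for i, s in enumerate(seeds)]
    for label, name in label_map.items():
        parts.append(f"=== {label} ===")
        parts += [f"[{label}, sample {j + 1}]\n{t}"
                  for j, t in enumerate(outputs_by_system[name])]
    parts.append("Compare the systems against the seeds on: " + ", ".join(DIMENSIONS) + ".")
    return "\n\n".join(parts), label_map
```

Enforcing equal sample counts matters because an evaluator that sees more samples from one system can be biased on diversity and length judgments.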

Results

At a Glance

| Dataset | Dataframer | Baseline (Sonnet 4.5) |
|---|---|---|
| Wikisource | Full novellas with compelling plots, authentic period voices | Only produced dry essays, ignored fiction entirely |
| Gutenberg | Superb prose quality, massive creativity | Plot loop - same expedition story repeated |
| Wiki Real Estate | 15 unique topics from 5 inputs, perfect style match | 8x “Zoning”, 4x “Land Value Tax” |
| Wiki Medical | Long-context coherence, encyclopedic depth | Too short, added unwanted Markdown formatting |

The key insight: same model (Claude Sonnet 4.5), same seeds - the only difference is Dataframer’s agentic scaffold.

Deep Dive: Wikisource

| Criterion | Dataframer | Sonnet 4.5 Baseline |
|---|---|---|
| Diversity | Exceptional - political treatises, epistolary novels, sci-fi, utopias. Creatively merges both inputs. | Very low - nearly all dry expository essays. No fiction, no dialogue. Repetitive titles. |
| Style Distribution | Matches both input styles. Reproduces Wikisource formatting (nav arrows, metadata). Authentic period voices. | Fails - homogenizes everything into generic “academic” voice. |
| Length | Massive long-form content - full novellas with Preface to Epilogue structure. | Short-medium essays, summary-based, lacking depth. |
| Quality | Extraordinary - compelling plots, character arcs, authentic world-building. | Mediocre - reads like undergraduate summaries. |
| Artifacts | Intentionally reproduces Wikisource artifacts (nav links, page numbers). | Strips all formatting. |
| Validity | High - historically grounded, internally consistent. | Moderate - logically sound but platitudinous. |

Winner: Dataframer (vastly superior)

The other three datasets showed consistent patterns: Dataframer maintained diversity and style fidelity while the baseline collapsed into repetitive outputs (Gutenberg: same plot structure repeated; Wiki Real Estate: 80% duplicate topics) and introduced unwanted formatting changes.
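One way to compute a duplicate-topic figure, assuming each sample has already been labeled with a topic (by title or by an LLM labeler), is the share of samples whose topic appears more than once. This definition is our illustrative assumption; with the Wiki Real Estate baseline counts (8 “Zoning” + 4 “Land Value Tax” out of 15) it gives 12/15 = 80%. The three remaining topic names in the usage example are placeholders.

```python
from collections import Counter


def duplicate_share(topics):
    """Fraction of samples whose topic also appears in at least one other sample."""
    if not topics:
        return 0.0
    counts = Counter(t.strip().lower() for t in topics)  # normalize case/whitespace
    duplicated = sum(c for c in counts.values() if c > 1)
    return duplicated / len(topics)


# Baseline counts from above; "Other 1".."Other 3" are placeholder labels.
baseline = ["Zoning"] * 8 + ["Land Value Tax"] * 4 + ["Other 1", "Other 2", "Other 3"]
print(duplicate_share(baseline))  # 0.8
```

A set of 15 distinct topics, as Dataframer produced on this dataset, scores 0.0.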

Full Evaluation Details

Evaluation summaries and full reports:

- Wikisource: Summary | Full

- Gutenberg: Summary | Full

- Wiki Real Estate: Summary | Full

- Wiki Medical: Summary | Full

All generated outputs (both Dataframer and baseline) are available for download:

- Wikisource: Download outputs

- Gutenberg: Download outputs

- Wiki Real Estate: Download outputs

- Wiki Medical: Download outputs

All data (seeds, Dataframer outputs, and baseline outputs) is also available on HuggingFace.

Dataframer Avoids Typical Synthetic Data Failure Modes

The blind evaluation revealed three distinct failure modes in the baseline that Dataframer successfully avoids:

Failure Mode 1: Mode Collapse

The Problem: The baseline repeatedly generates the same topics or formulaic plot structures.

- Wiki Real Estate: “Zoning” generated 8 times, “Land Value Tax” 4 times out of 15 samples

- Gutenberg: Every story follows ship → island → ruins → beings → escape

- Wiki Medical: Duplicate “Medical Education” articles

How Dataframer Avoids It: Strategic diversity injections during the outlining phase ensure each sample explores distinct territory within the semantic space of the seeds.
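We do not describe the internals of these injections here, so the following is only a simple stand-in for the idea: give each outline a distinct combination of attribute values, sampled without replacement, before any text is written. The axes and their values in the usage example are hypothetical; in practice they would come from the spec derived from the seeds.

```python
import itertools
import random


def plan_diverse_briefs(axes, n, seed=0):
    """Assign each of n samples a distinct combination of attribute values.

    axes maps an attribute name (e.g. "topic", "form") to candidate values.
    Sampling combinations without replacement guarantees that no two briefs
    share the same full (topic, form, ...) tuple.
    """
    names = list(axes)
    combos = list(itertools.product(*(axes[k] for k in names)))
    if n > len(combos):
        raise ValueError(f"only {len(combos)} distinct combinations available")
    rng = random.Random(seed)
    chosen = rng.sample(combos, n)
    return [dict(zip(names, c)) for c in chosen]


# Hypothetical axes for a real-estate-style dataset.
axes = {"topic": ["zoning", "REIT governance", "property tax appeals"],
        "form": ["encyclopedic overview", "history section"]}
for brief in plan_diverse_briefs(axes, 4):
    print(brief)
```

Note that distinct tuples do not imply distinct topics; if topic uniqueness is the goal, make topic the only axis or sample topics first.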

Failure Mode 2: Style Drift

The Problem: The baseline introduces formatting and structural elements not present in the seed data.

- Added Markdown headers (#, ##) when inputs used plain text

- Converted dense encyclopedic prose into bullet-point lists and blog-post-style summaries

- Stripped source-specific formatting artifacts (Wikisource navigation, metadata)

How Dataframer Avoids It: Iterative evaluation and editing loops keep the generated style tightly matched to the original distribution. The system continuously compares output characteristics against seed characteristics.
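A lightweight version of such a comparison can be sketched with per-1,000-word rates of formatting features. The feature list, regexes, and the `tolerance` threshold are our assumptions for illustration; the regexes are simple heuristics, not a Markdown parser.

```python
import re

# Formatting features to track (heuristic regexes, assumed for illustration).
FEATURES = {
    "markdown_header": re.compile(r"^#{1,6}[ \t]", re.MULTILINE),
    "bullet_line": re.compile(r"^[ \t]*[-*+][ \t]", re.MULTILINE),
    "bold_markup": re.compile(r"\*\*[^*\n]+\*\*"),
}


def feature_rates(text):
    """Occurrences of each formatting feature per 1,000 whitespace-separated words."""
    words = max(len(text.split()), 1)
    return {name: 1000 * len(rx.findall(text)) / words for name, rx in FEATURES.items()}


def style_drift(seeds, output, tolerance=2.0):
    """Features whose rate in `output` exceeds the highest seed rate by more than `tolerance`."""
    seed_max = {name: max(feature_rates(s)[name] for s in seeds) for name in FEATURES}
    out = feature_rates(output)
    return [name for name in FEATURES if out[name] > seed_max[name] + tolerance]
```

For a plain-prose seed and an output that opens with `# Title` and a few `- ` bullets, `style_drift` flags `markdown_header` and `bullet_line`. The reverse check (features frequent in the seeds but absent from the output) catches the other direction of drift, such as stripped Wikisource navigation.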

Failure Mode 3: Length Shrinkage

The Problem: The baseline generates summaries instead of full documents, lacking detail and nuance.

- Wikisource seeds were 35k-50k tokens; baseline outputs were 2k-5k tokens

- Inputs were dense, chapter-length texts; outputs were brief essays

How Dataframer Avoids It: The algorithm explicitly accounts for target length during outlining and generation, and it maintains long-context coherence across the document through the outline and structured revision passes.
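As an illustration of length-aware outlining (a simple proportional split we chose for this sketch, not Dataframer's documented mechanism), a document-level token target can be divided across outline sections by relative weight, with a per-section floor. The section names, weights, and `min_tokens` default are hypothetical.

```python
def allocate_length(section_weights, target_tokens, min_tokens=200):
    """Split a document-level token target across outline sections.

    section_weights: relative sizes from the outline (e.g. 2 for a core chapter).
    Returns per-section budgets that sum exactly to target_tokens.
    """
    if target_tokens < min_tokens * len(section_weights):
        raise ValueError("target too small for the number of sections")
    total = sum(section_weights.values())
    spare = target_tokens - min_tokens * len(section_weights)
    # Every section gets the floor, plus a weighted share of the remainder.
    budgets = {name: min_tokens + int(spare * w / total)
               for name, w in section_weights.items()}
    # int() truncates, so hand the small leftover to the heaviest section.
    leftover = target_tokens - sum(budgets.values())
    budgets[max(section_weights, key=section_weights.get)] += leftover
    return budgets


print(allocate_length({"Preface": 1, "Ch1": 2, "Ch2": 2, "Epilogue": 1}, 45000))
# {'Preface': 7566, 'Ch1': 14935, 'Ch2': 14933, 'Epilogue': 7566}
```

Each budget can then go into that section's generation prompt, and a revision pass can expand any section that comes back well under its budget.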

Discussion

There are many aspects of synthetic data quality that need to be monitored: coherence, diversity, specification matching, length profiles, and more. Missing any one of these can result in an unusable dataset.

Our evaluation shows that naive prompting of frontier models collapses into repetitive, homogenized outputs. Dataframer’s agentic scaffold - with its outlining, generation, filtering, and revision stages - produces dramatically better results using the exact same underlying model.

For practitioners building synthetic data pipelines:

- Watch for mode collapse: Count unique topics/structures in your outputs

- Watch for style drift: Compare formatting and structure to your inputs

- Watch for length shrinkage: Are your outputs significantly shorter than your inputs?
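For the length check, a quick sketch is to compare median output length against median seed length. The word-count-times-1.3 token estimate is a rough assumption for English prose; substitute your model's tokenizer for exact counts.

```python
from statistics import median


def approx_tokens(text):
    # Crude proxy: whitespace-separated words * 1.3 (assumed ratio for English prose).
    return int(len(text.split()) * 1.3)


def length_ratio(seeds, outputs):
    """Median output length divided by median seed length (1.0 = same length)."""
    seed_median = median([approx_tokens(t) for t in seeds])
    if seed_median == 0:
        raise ValueError("seed texts are empty")
    return median([approx_tokens(t) for t in outputs]) / seed_median
```

With the Wikisource numbers above (seeds of 35k-50k tokens, baseline outputs of 2k-5k tokens), the ratio lands somewhere around 0.04 to 0.14, far below the 1.0 a length-faithful generator should approach.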

The difference is stark: a system that actually satisfies your specifications versus one that collapses into repetitive, homogenized outputs.

"Dataframer offers an industry-first agentic platform to generate large structured or unstructured synthetic datasets. Interested in generating high-quality data for your use case? Just reach out to us."
